Kafka, the Messaging Backbone for Microservices – An Overview

Technology Blog, August 22, 2023

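Apache Kafka is designed to transfer data efficiently from one server to another. How reliably it does so depends on configuration, including how data is sent and how receipt is confirmed (for example, the producer's acks setting controls how many replicas must acknowledge a write). Kafka is sometimes confused with a network protocol such as TCP, but it is not one: Kafka clients and brokers communicate using Kafka's own binary protocol, which runs on top of TCP, the protocol that operates at the transport layer of the OSI model.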
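A minimal Python sketch of what "a protocol on top of TCP" means in practice: before anything is written to the TCP socket, a Kafka client encodes each request in Kafka's binary format. The example assumes an ApiVersions request (API key 18) at version 0, which uses the v1 request header (api_key, api_version, correlation_id, client_id) and has an empty body. The function names, client ID, and correlation ID are illustrative; real client libraries do all of this for you.

```python
import struct

API_VERSIONS_KEY = 18  # ApiVersions: lets a client discover which API versions a broker supports


def encode_nullable_string(value):
    """Kafka NULLABLE_STRING: int16 length (-1 for null) followed by UTF-8 bytes."""
    if value is None:
        return struct.pack(">h", -1)
    data = value.encode("utf-8")
    return struct.pack(">h", len(data)) + data


def frame_api_versions_request(correlation_id, client_id):
    """Build a size-prefixed ApiVersions v0 request (request header v1, empty body)."""
    # Header fields are big-endian: int16 api_key, int16 api_version, int32 correlation_id.
    header = struct.pack(">hhi", API_VERSIONS_KEY, 0, correlation_id)
    header += encode_nullable_string(client_id)
    body = b""  # ApiVersions v0 has no request fields
    payload = header + body
    # Each request on the TCP stream is preceded by its length as a big-endian int32.
    return struct.pack(">i", len(payload)) + payload


if __name__ == "__main__":
    frame = frame_api_versions_request(correlation_id=1, client_id="demo")
    print(len(frame), frame.hex())
```

The resulting bytes could be sent with socket.create_connection((host, 9092)).sendall(frame), where host is your broker's address (hypothetical here) and 9092 is Kafka's default plaintext port. The broker answers with its own size-prefixed response that echoes the same correlation ID, which is how a client matches responses to requests.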

Kafka was originally developed at LinkedIn and is now an open-source project of the Apache Software Foundation. Many well-known organizations use it to ingest data feeds and organize them into topics.

It can also be considered a replacement for traditional messaging tools such as ActiveMQ. It has rapidly become a popular open-source tool, largely because it scales horizontally: capacity grows by adding brokers and partitions. Kafka data can also be exported to a data warehouse for reporting and analysis.

A wide range of organizations, including Pinterest, use Kafka for stream processing. Kafka supports continuous data streaming, so new data keeps loading as it arrives, much like a feed that never stops as you scroll down.

Why Do We Use Kafka?

Kafka is an open-source, scalable, fault-tolerant, and fast messaging system. Because of its reliability, it is often used in place of traditional message brokers.

It is used for general-purpose messaging in many scenarios, particularly where horizontal scalability and reliable delivery are the most important requirements. Common use cases of Kafka include:

- Website Activity Monitoring

- Stream Processing

- Log Aggregation

- Metrics Collection

How Does Kafka Work?

Kafka is designed as a distributed commit log: incoming data is written sequentially to disk. Four major components are involved in moving data through Kafka:

-

Broker

Brokers are the Kafka clusters that consist of one or more servers. They replication of duplication of message data.

-

Topic

The topic is a well-defined category by the user to which the messages are distributed.

-

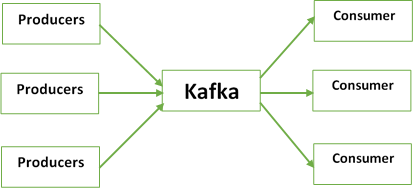

Consumer

Consumers are the topic, process and published message subscribers.

-

Producers

Producers are the data publishers to one and more topics.

Kafka's high performance depends partly on how little work the broker has to do. Each topic consists of one or more ordered partitions, and each partition is an immutable, append-only sequence of messages. Another important factor that enhances Kafka's performance is the way consumption is tracked:

The broker is not responsible for keeping track of consumed messages; that is the consumer's job. Each consumer records its own position (offset) in each partition, so the broker needs no per-consumer bookkeeping and the system scales as more consumers are added. This also lets each consumer determine for itself whether a message has already been processed.
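The two ideas above (append-only partitions, and consumers that track their own offsets) can be illustrated with a toy, in-memory Python model. This is not Kafka's implementation: the class and method names are hypothetical, and crc32 is an assumed stand-in for Kafka's murmur2-based default partitioner. What it does show is that records with the same key land in the same partition in order, and that the "broker" side only serves reads from whatever offset a consumer asks for.

```python
import zlib
from collections import defaultdict


class Topic:
    """Toy topic: a fixed number of append-only partitions (lists of records)."""

    def __init__(self, name, num_partitions):
        self.name = name
        self.partitions = [[] for _ in range(num_partitions)]

    def partition_for(self, key):
        # Assumption: crc32 stands in for Kafka's murmur2-based default partitioner.
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def append(self, key, value):
        """Append a record and return (partition, offset); existing records never change."""
        p = self.partition_for(key)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1

    def read(self, partition, offset, max_records=10):
        """Broker-side read: return records starting at the offset the consumer requests."""
        return self.partitions[partition][offset:offset + max_records]


class Consumer:
    """The consumer, not the broker, remembers how far it has read in each partition."""

    def __init__(self, topic):
        self.topic = topic
        self.positions = defaultdict(int)  # partition -> next offset to read

    def poll(self):
        """Fetch new records from every partition and advance this consumer's offsets."""
        records = []
        for p in range(len(self.topic.partitions)):
            batch = self.topic.read(p, self.positions[p])
            self.positions[p] += len(batch)
            records.extend(batch)
        return records
```

Two Consumer objects reading the same Topic keep independent positions, which is why adding consumers adds no bookkeeping on the broker side. In real Kafka, consumers also commit their offsets periodically so they can resume from the right place after a restart.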

What Are Some Notable Kafka Developments?

- Rack awareness: replicas of each partition can be spread across different racks, which increases availability if an entire rack fails.

- Message timestamps: each message carries a timestamp that indicates when it was created (or, if so configured, when the broker appended it).

- SASL enhancements: support for external authentication servers and for multiple SASL mechanisms on a single broker.

Apache Kafka Security

Apache Kafka lets back-end systems share real-time data through topics. In the default setup, with no security configured, any application or user can write messages to any topic and read from any topic.

As an organization brings more applications and teams onto a shared Kafka cluster, that openness becomes a risk. Implementing security is essential to keep confidential information on the cluster from being read or modified by clients that should not have access to it.

Major Security Components

The three major components of Kafka security are:

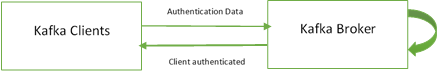

SSL/SASL Authentication

SSL/SASL authentication allows producers and consumers to prove their identity to the Kafka cluster: each client asserts an identity, and the brokers verify it. With SSL, clients can also verify that they are connecting to genuine brokers. This verified identity is the prerequisite for authorization.
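SASL supports several mechanisms, and the simplest one Kafka offers is SASL/PLAIN, whose message format is defined in RFC 4616. The Python sketch below builds the message a client sends to assert its identity under PLAIN; the function name and the credentials in the usage note are placeholders, and real Kafka clients build this message internally. Note that the password travels in the clear inside this message, which is why PLAIN is normally combined with TLS (Kafka's SASL_SSL security protocol).

```python
def sasl_plain_initial_response(username, password, authzid=""):
    """Build the RFC 4616 PLAIN message: [authzid] NUL authcid NUL passwd (UTF-8)."""
    for part in (authzid, username, password):
        # NUL is the field separator, so it may not appear inside any field.
        if "\0" in part:
            raise ValueError("SASL PLAIN fields must not contain NUL characters")
    return f"{authzid}\0{username}\0{password}".encode("utf-8")
```

For example, sasl_plain_initial_response("alice", "secret") yields the bytes b"\x00alice\x00secret": an empty authorization identity, then the username, then the password.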

ACL Authorization

Once clients are authenticated, Kafka brokers can check each request against access control lists (ACLs). ACLs determine whether a particular client is authorized to read from or write to a given topic; in other words, they define what a client can and cannot do.

ACLs help prevent disasters. For example, suppose a topic must be writable only from a specific subset of hosts.

You would want to stop ordinary users from writing to that topic, and ACLs make this possible. They work especially well for sensitive data, since only the permitted applications can read or write it.
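The scenario above can be sketched in Python as a simplified ACL check. It models two rules from Kafka's documented behavior: a matching Deny ACL overrides any Allow, and a request is rejected unless some ACL allows it (Kafka's default when allow.everyone.if.no.acl.found is false). The Acl class, the function names, and the example hosts and principals are hypothetical; Kafka's real authorizer also covers other resource types, prefixed resource patterns, and operations such as All.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Acl:
    principal: str   # e.g. "User:alice", or "User:*" for any user
    host: str        # client host, or "*" for any host
    operation: str   # e.g. "Read" or "Write"
    topic: str       # literal topic name
    permission: str  # "Allow" or "Deny"


def _matches(acl, principal, host, operation, topic):
    """True if this ACL applies to the request (wildcards for principal and host only)."""
    return (
        acl.principal in (principal, "User:*")
        and acl.host in (host, "*")
        and acl.operation == operation
        and acl.topic == topic
    )


def is_authorized(acls, principal, host, operation, topic):
    """Deny wins over Allow; if nothing allows the request, it is rejected."""
    matching = [a for a in acls if _matches(a, principal, host, operation, topic)]
    if any(a.permission == "Deny" for a in matching):
        return False
    return any(a.permission == "Allow" for a in matching)
```

With ACLs that allow Write on a payments topic only from hosts 10.0.0.5 and 10.0.0.6, a write from any other host is refused, and an explicit Deny for one user blocks that user even from an allowed host.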

TLS/SSL Data Encryption

TLS/SSL encrypts data in transit between producers and Kafka and between Kafka and consumers. This is the same pattern used almost everywhere on the web: TLS is the "S" in HTTPS. It keeps data confidential and protects it from tampering while it travels over the network.
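To show what the client side of TLS involves, here is a minimal sketch using Python's standard ssl module rather than a Kafka client library. It assumes a broker exposing a TLS listener; the host name broker1.example.com and port 9093 in the usage note are hypothetical (9093 is a common convention, not a Kafka requirement). In real Kafka clients the equivalent is enabled through client settings such as security.protocol=SSL.

```python
import socket
import ssl


def make_client_tls_context(cafile=None):
    """Create a client TLS context that verifies the broker's certificate and host name."""
    # create_default_context enables certificate verification and host name checking.
    context = ssl.create_default_context(cafile=cafile)
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocol versions
    return context


def open_tls_connection(host, port, context):
    """Open a TCP connection to the broker and wrap it in TLS before any data is sent."""
    raw = socket.create_connection((host, port), timeout=10)
    return context.wrap_socket(raw, server_hostname=host)
```

Calling open_tls_connection("broker1.example.com", 9093, make_client_tls_context("ca.pem")), with ca.pem as a hypothetical CA bundle for your cluster, would return a socket over which Kafka protocol requests are sent encrypted. The design choice worth noting is that verification stays on: a client that skips certificate checks gets encryption but no assurance that it is talking to the real broker.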

Final Thoughts

That concludes this overview of Kafka and its basic features. Kafka can serve as a core part of your data infrastructure: it is useful for moving data between systems even when no analytics layer is in place, and it makes data streams easier to manage.
